12/16/2014

PITTSBURGH, Dec. 16, 2014 – In another demonstration that brain-computer interface technology has the potential to improve the function and quality of life of people unable to use their own arms, a woman with quadriplegia used only her thoughts to shape the almost-human hand of a robot arm and pick up big and small boxes, a ball, an oddly shaped rock, and fat and skinny tubes.

The findings by researchers at the University of Pittsburgh School of Medicine, published online today in the Journal of Neural Engineering, describe, for the first time, 10-dimensional (10D) brain control of a prosthetic device, in which the trial participant used the arm and hand to reach, grasp, and place a variety of objects.

“Our project has shown that we can interpret signals from neurons with a simple computer algorithm to generate sophisticated, fluid movements that allow the user to interact with the environment,” said senior investigator Jennifer Collinger, Ph.D., assistant professor, Department of Physical Medicine and Rehabilitation (PM&R), Pitt School of Medicine, and research scientist for the VA Pittsburgh Healthcare System.

In February 2012, small electrode grids, each with 96 tiny contact points, were surgically implanted in the regions of trial participant Jan Scheuermann’s brain that would normally control movement of her right arm and hand. Each electrode point picked up signals from an individual neuron, and these signals were relayed to a computer to identify the firing patterns associated with particular observed or imagined movements, such as raising or lowering the arm or turning the wrist. That “mind-reading” was used to direct the movements of a prosthetic arm developed by the Johns Hopkins University Applied Physics Laboratory.

Within a week of the surgery, Ms. Scheuermann could move the arm in and out, left and right, and up and down, achieving 3D control. Before three months had passed, she could also flex the wrist back and forth, move it from side to side and rotate it clockwise and counter-clockwise, as well as grip objects, for a total of 7D control. Those findings were published in The Lancet in 2012.

“In the next part of the study, described in this new paper, Jan mastered 10D control, allowing her to move the robot hand into different positions while also controlling the arm and wrist,” said Michael Boninger, M.D., professor and chair, PM&R, and director of the UPMC Rehabilitation Institute.

To bring the total number of controlled arm and hand dimensions to 10, the simple pincer grip was replaced by four hand shapes: finger abduction, in which the fingers are spread out; scoop, in which the last fingers curl in; thumb opposition, in which the thumb moves outward from the palm; and a pinch of the thumb, index and middle fingers. As before, Ms. Scheuermann watched animations of the movements and imagined performing them while the team recorded the signals her brain was sending, a process called calibration. The team then used what it had learned to read her thoughts so she could move the hand into the various positions.
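For readers curious about what calibration and "reading her thoughts" can look like computationally, the following is a minimal Python sketch of one common approach: a linear decoder fit by ridge regression, mapping recorded firing rates to a 10-dimensional movement command. It is an illustration under stated assumptions, not the algorithm the Pitt team published. The synthetic firing rates, the number of neurons, and the helper names `calibrate` and `decode` are all hypothetical, and a real system would also need to handle baseline firing rates, smoothing and noise. The 10 output dimensions follow the breakdown in the article: three for moving the arm, three for the wrist and four for the hand shapes.

```python
import random

# Hypothetical layout: 3 arm translation + 3 wrist rotation + 4 hand-shape dimensions.
N_DIMS = 10


def transpose(m):
    """Return the transpose of a list-of-lists matrix."""
    return [list(row) for row in zip(*m)]


def matmul(a, b):
    """Multiply matrices a (n x k) and b (k x m) given as lists of lists."""
    bt = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in bt] for row in a]


def solve(a, b):
    """Solve a @ x = b (a is n x n, b is n x k) by Gauss-Jordan elimination
    with partial pivoting. Inputs are copied, not modified."""
    n = len(a)
    aug = [list(a[i]) + list(b[i]) for i in range(n)]
    for col in range(n):
        # Swap in the row with the largest entry in this column for stability.
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [v - f * w for v, w in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def calibrate(rates, kinematics, ridge=1e-6):
    """Hypothetical calibration step: fit weights W (neurons x N_DIMS) so that
    rates @ W approximates the intended movements recorded while the user
    watched and imagined them. Uses W = (X^T X + ridge * I)^-1 X^T Y."""
    xt = transpose(rates)
    xtx = matmul(xt, rates)
    for i in range(len(xtx)):
        xtx[i][i] += ridge
    xty = matmul(xt, kinematics)
    return solve(xtx, xty)


def decode(rate_vector, weights):
    """Map one time bin of firing rates to a 10-D movement command."""
    return matmul([rate_vector], weights)[0]


if __name__ == "__main__":
    random.seed(0)
    n_neurons, n_samples = 20, 200  # hypothetical sizes for the demo
    # Synthetic, noise-free data standing in for normalized firing rates.
    true_w = [[random.gauss(0, 1) for _ in range(N_DIMS)] for _ in range(n_neurons)]
    rates = [[random.gauss(0, 1) for _ in range(n_neurons)] for _ in range(n_samples)]
    kin = matmul(rates, true_w)
    w = calibrate(rates, kin)
    print(decode(rates[0], w))
```

Because the demo data are generated from an exact linear map with no noise, the fitted decoder reproduces the intended commands almost perfectly; recorded neural data would be far noisier, which is one reason calibration is repeated and refined in practice.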

“Jan used the robot arm to grasp more easily when objects had been displayed during the preceding calibration, which was interesting,” said co-investigator Andrew Schwartz, Ph.D., professor of Neurobiology, Pitt School of Medicine. “Overall, our results indicate that highly coordinated, natural movement can be restored to people whose arms and hands are paralyzed.”

After surgery in October to remove the electrode arrays, Ms. Scheuermann concluded her participation in the study.

“This has been a fantastic, thrilling, wild ride, and I am so glad I’ve done this,” she said. “This study has enriched my life, given me new friends and coworkers, helped me contribute to research and taken my breath away. For the rest of my life, I will thank God every day for getting to be part of this team.”

The team included John E. Downey, BS, Elizabeth Tyler-Kabara, M.D., Ph.D., and Michael Boninger, M.D., all of the University of Pittsburgh School of Medicine; and lead author Brian Wodlinger, Ph.D., now of Imagistx, Inc. The project was funded by the Defense Advanced Research Projects Agency, the Department of Veterans Affairs, and the UPMC Rehabilitation Institute.

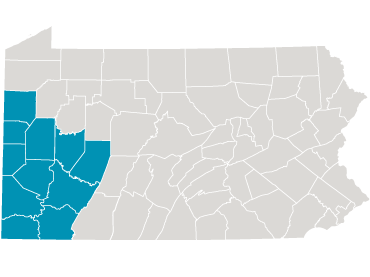

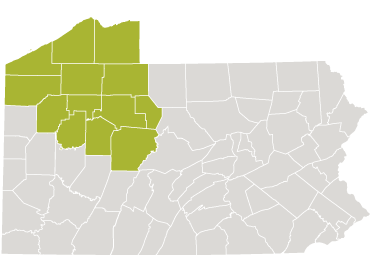

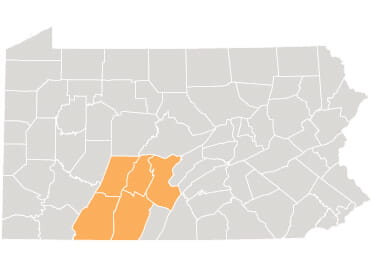

Controlling the robot arm with her thoughts, the participant shaped the hand into four positions: fingers spread, scoop, pinch and thumb up.

Photo credit: Journal of Neural Engineering/IOP Publishing